Unpacking the AI App Store: OpenAI's GPTs vs. Anthropic's MCPs

How Developers and Businesses Can Navigate the Emerging Ecosystem of AI Agents and Integrated Tools – In a 3-Minute Read

The Gist:

OpenAI, with its user-friendly Custom GPTs, and Anthropic, with its developer-focused Model Context Protocol (MCP), are in a battle to define how we interact with AI. This isn't just about chatbots; it's a struggle for dominance over the emerging AI app store ecosystem – a future built for AI that can do, not just say.

What Needs to be Understood:

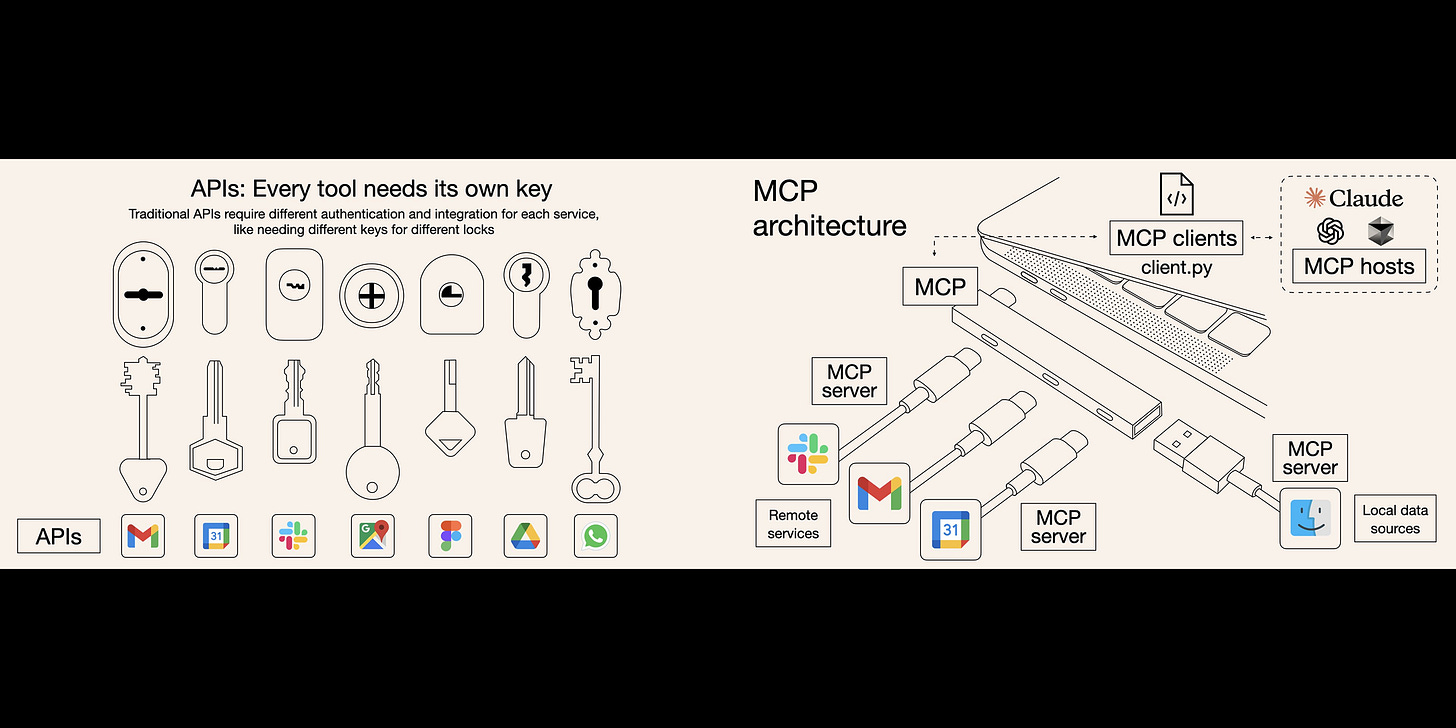

Standards Enable Communication: Just as web standards (like HTTP) let browsers and servers talk to each other, AI needs shared standards to connect models to different systems.

The Evolution of AI Interaction:

Phase 1, LLMs Alone: Powerful, but limited to their training data – like brains in a jar.

Phase 2, LLMs + Tools: Adding basic tool use (like searching the web) expands capabilities, but integration is often clunky.

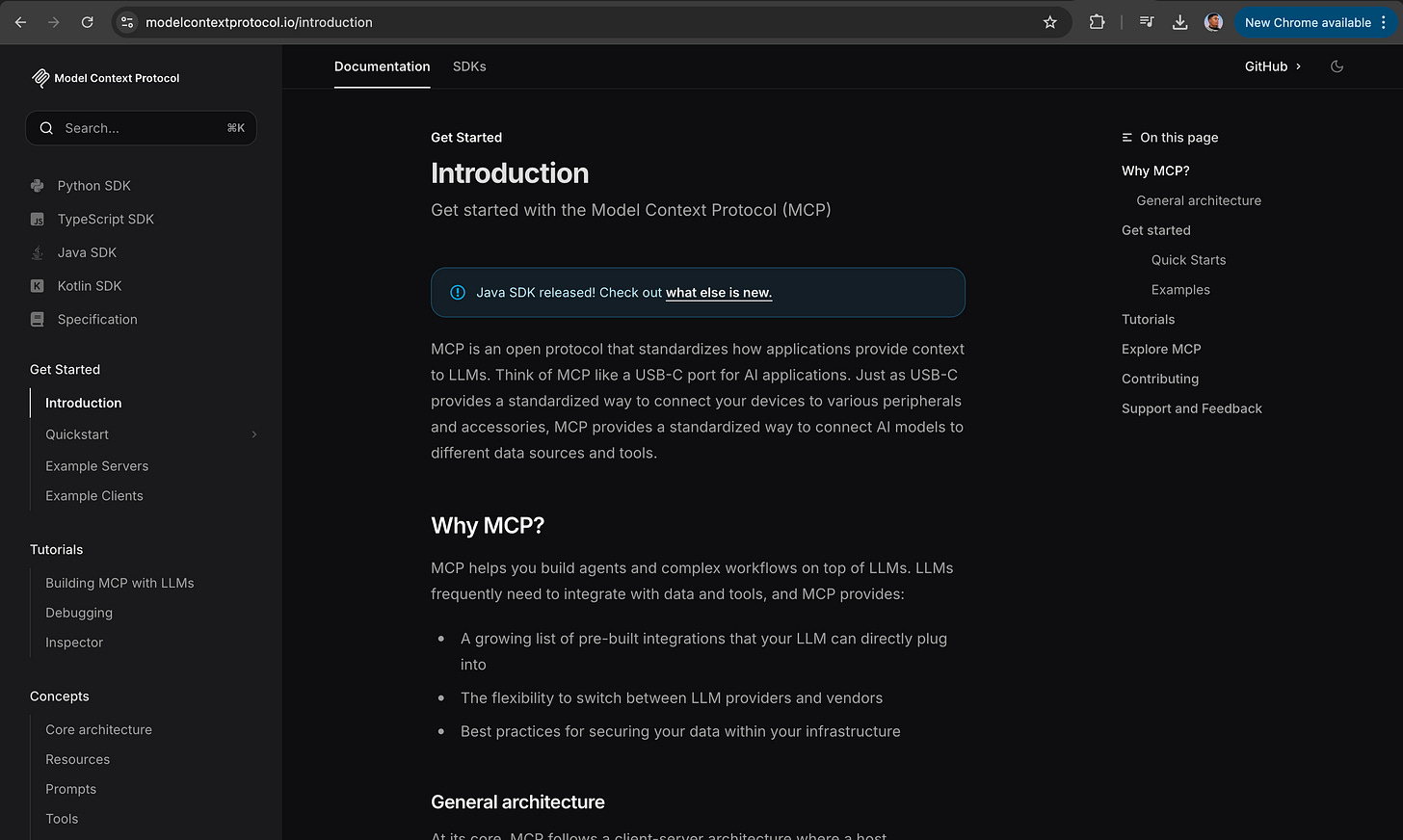

Phase 3, LLMs + MCPs: A standardized way for LLMs to connect to any tool or data source that exposes an MCP server, unlocking true agentic potential.

Models are Only as Good as Their Context: Both Custom GPTs and MCPs aim to give AI models the context they need to be truly useful. The difference lies in how they provide that context.

Containment vs. Interoperability:

Custom GPTs: Focus on packaging prompts and knowledge into self-contained "mini-apps." Think of them as specialized assistants for specific tasks.

MCPs: Focus on connecting AI agents to external tools and data sources. Think of them as the "plumbing" that enables AI to interact with the real world.

The Client-Server Model (MCP) - In Action:
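To make the client-server idea concrete, here is a minimal sketch in plain Python. It assumes only what the protocol publicly documents: MCP messages are JSON-RPC 2.0, and a client can discover tools with `tools/list` and invoke one with `tools/call`. Everything else is a simplification for illustration: the `get_weather` tool, the `handle_request` and `client_call` helpers, and the in-process function call that stands in for a real transport (stdio or HTTP) are all hypothetical, and the response shapes are trimmed down from what a real server returns.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool: returns canned text instead of calling a real API."""
    return f"Sunny in {city}"


# Server-side registry of tools (names and descriptions are illustrative).
TOOLS = {
    "get_weather": {
        "description": "Return a canned weather report for a city.",
        "handler": get_weather,
    }
}


def _reply(request_id, key, payload) -> str:
    """Wrap a result or error payload in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, key: payload})


def handle_request(raw: str) -> str:
    """Toy server: dispatch one JSON-RPC request string, return the response."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        tools = [
            {"name": name, "description": tool["description"]}
            for name, tool in TOOLS.items()
        ]
        return _reply(request_id, "result", {"tools": tools})

    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(request_id, "error", {"code": -32602, "message": "Unknown tool"})
        text = tool["handler"](**params.get("arguments", {}))
        return _reply(request_id, "result", {"content": [{"type": "text", "text": text}]})

    return _reply(request_id, "error", {"code": -32601, "message": "Method not found"})


def client_call(request_id: int, method: str, params=None) -> dict:
    """Toy client: build a request, 'send' it to the server, parse the reply."""
    request = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        request["params"] = params
    return json.loads(handle_request(json.dumps(request)))


if __name__ == "__main__":
    print(client_call(1, "tools/list"))
    print(client_call(2, "tools/call",
                      {"name": "get_weather", "arguments": {"city": "Paris"}}))
```

The point of the sketch is the division of labor: the AI application (the client) never needs to know how a tool works, only how to ask the server what it offers and how to call it.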

Observations:

Early Adoption: Custom GPTs (3+ million created) have resonated with everyday users. MCP is gaining traction with developers and enterprises building complex AI systems (600+ MCP servers and growing).

Two Different Paths: OpenAI's revenue is dominated by ChatGPT subscriptions (~$2.7B annualized, 73% of revenue). Anthropic's is weighted towards API usage (60-75% from APIs, contributing to a $1B annualized run rate).

The Rise of "Agentic" Computing: MCP's arrival coincides with the rise of AI agents that access computers and digital tools in much the same way we humans do.

Expanding AI's Capabilities: MCP isn't just about connecting to tools; it's about fundamentally expanding what AI can do.

Something to Think About:

The Upskilling Imperative: How might you collaborate with AI agents to augment your work – making it easier, faster, better? What new skills will be most valuable?

Where's The Money?: Where will the financial opportunities emerge in the MCP ecosystem? Creating/selling MCP servers? Building specialized agents? Providing integration services?

Learning the Ropes: Do you understand the basics of connecting an MCP server to an AI client application (like ChatGPT or Claude)? Are you prepared?

With MCPs you can give your AI the tools, resources, and prompts it needs to complete your tasks:
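As a rough illustration of those three building blocks, the sketch below models a server that registers one tool, one resource, and one prompt. It is a toy written with the standard library only, not the official MCP SDK: the `ToyServer` class, its decorators, the `notes://today` URI, and the example functions are all hypothetical names chosen for this example.

```python
from typing import Callable, Dict


class ToyServer:
    """Hypothetical stand-in for an MCP server; not the official SDK."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: Dict[str, Callable[..., object]] = {}
        self.resources: Dict[str, Callable[[], str]] = {}
        self.prompts: Dict[str, Callable[..., str]] = {}

    def tool(self, fn: Callable[..., object]) -> Callable[..., object]:
        """Register an action the model can ask the server to run."""
        self.tools[fn.__name__] = fn
        return fn

    def resource(self, uri: str) -> Callable:
        """Register read-only data, addressed by a URI, for use as context."""
        def register(fn: Callable[[], str]) -> Callable[[], str]:
            self.resources[uri] = fn
            return fn
        return register

    def prompt(self, fn: Callable[..., str]) -> Callable[..., str]:
        """Register a reusable prompt template."""
        self.prompts[fn.__name__] = fn
        return fn


server = ToyServer("notes")


@server.tool
def word_count(text: str) -> int:
    """Tool: count whitespace-separated words."""
    return len(text.split())


@server.resource("notes://today")
def todays_note() -> str:
    """Resource: a fixed note standing in for real data."""
    return "Ship the MCP demo by Friday."


@server.prompt
def summarize(text: str) -> str:
    """Prompt: wrap some text in a summarization instruction."""
    return f"Summarize the following note in one sentence:\n{text}"


if __name__ == "__main__":
    note = server.resources["notes://today"]()
    print(server.tools["word_count"](note))
    print(server.prompts["summarize"](note))
```

A useful way to remember the split: tools do things, resources supply information, and prompts package instructions that can be reused.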

You can connect multiple MCP servers to your AI and it can use the different tools together to complete the task:
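One way to picture this is a host application that keeps a routing table of every tool offered by every connected server, then chains calls across them. The sketch below is a simplified, in-process model under stated assumptions: the `ToyHost` class, the `calendar` and `email` servers, and the `server.tool` naming scheme are hypothetical and do not reflect how any particular MCP client namespaces tools.

```python
from typing import Callable, Dict, List

# In this sketch each "server" is just a mapping of tool name -> function.
calendar_server: Dict[str, Callable] = {
    "next_meeting": lambda: "Design review at 14:00",
}
email_server: Dict[str, Callable] = {
    "draft_email": lambda to, body: f"To: {to}\n\n{body}",
}


class ToyHost:
    """Hypothetical host that aggregates tools from several servers."""

    def __init__(self) -> None:
        self.routes: Dict[str, Callable] = {}

    def connect(self, server_name: str, tools: Dict[str, Callable]) -> None:
        """Add a server's tools, prefixed with the server name to avoid clashes."""
        for tool_name, fn in tools.items():
            self.routes[f"{server_name}.{tool_name}"] = fn

    def list_tools(self) -> List[str]:
        """Return every tool the model could choose from, across all servers."""
        return sorted(self.routes)

    def call(self, qualified_name: str, **arguments):
        """Route a call to whichever server owns the named tool."""
        return self.routes[qualified_name](**arguments)


host = ToyHost()
host.connect("calendar", calendar_server)
host.connect("email", email_server)


def remind_about_next_meeting(to: str) -> str:
    """Chain two servers: look up the meeting, then draft an email about it."""
    meeting = host.call("calendar.next_meeting")
    return host.call("email.draft_email", to=to, body=f"Reminder: {meeting}")


if __name__ == "__main__":
    print(host.list_tools())
    print(remind_about_next_meeting("sam@example.com"))
```

In a real agent the model, not a hard-coded function, decides which tools to chain; the fixed `remind_about_next_meeting` helper is only there to show output from one server feeding a call to another.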

How to use MCP with Cursor:

Developers can learn how to build their first MCP server here:

Different servers you can start integrating and using now:

A framework for building AI Agents with MCP using Python:

Building Agents with Model Context Protocol - Full Workshop with Mahesh Murag of Anthropic: